This is an update of a post that originally appeared on February 17, 2014.

A number of my books tell the reader to perform tasks at the command line. What this means is that the reader must have access to applications stored on the hard drive. Windows doesn’t track the location of every application. Instead, it relies on the Path environment variable to provide the potential locations of applications. If the application the reader needs doesn’t appear on the path, Windows won’t be able to find it. Windows will simply display an error message. So, it’s important that any applications you need to access for my books appear on the path if you need to access them from the command line.

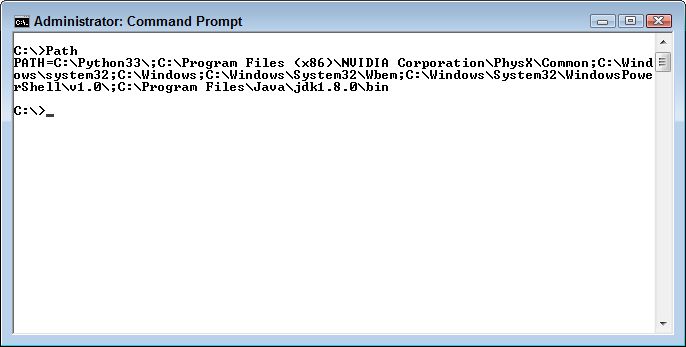

You can always see the current path by typing Path at the command line and pressing Enter. What you’ll see is a listing of locations, each of which is separated by a semicolon as shown here (your path will differ from mine).

In this case, Windows will begin looking for an application in the current folder. If it doesn’t find the application there, then it will look in C:\Python33\, then in C:\Program Files (x86)\NVIDIA Corporation\PhysX\Common, and so on down the list. Each potential location is separated from other locations using a semicolon as shown in the figure.

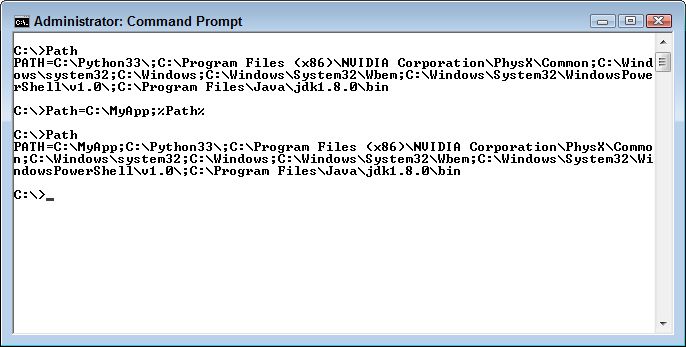

There are a number of ways to add a location to the Windows path. If you only need to add a path temporarily, you can simply extend the path by setting it to the new value, plus the old value. For example, if you want to add C:\MyApp to the path, you’d type Path=C:\MyApp;%Path% and press Enter. Notice that you must add a semicolon after C:\MyApp. Using %Path% appends the existing path after C:\MyApp. Here is how the result looks on screen.

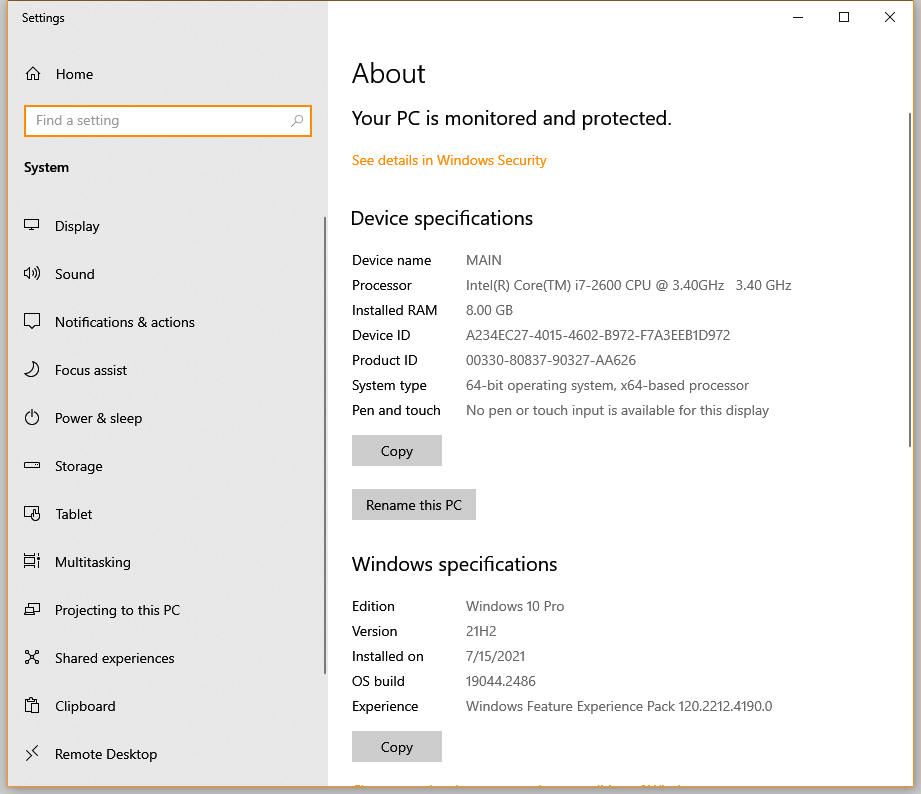

Of course, there are times when you want to make the addition to the path permanent because you plan to access the associated application regularly. In this case, you must perform the task within Windows itself. The following steps tell you how.

1. Right click This Computer (or Computer) and choose Properties from the context menu or select Settings (or System in the Control Panel). You see the a Settings (System) window similar to the one shown here open (precisely what you see depends on which version of Windows you have, the figure shows Windows 10).

2. Click Advanced System Settings. You see the Advanced tab of the System Properties dialog box shown here.

3. Click Environment Variables. You see the Environment Variables dialog box shown here. Notice that there are actually two sets of variables. The top set affects only the current user. So, if you plan to use the application, but don’t plan for others to use it, you’d make the Path environment variable change in the top field. The bottom set affects everyone who uses the computer. This is where you’d change the path if you want everyone to be able to use the application.

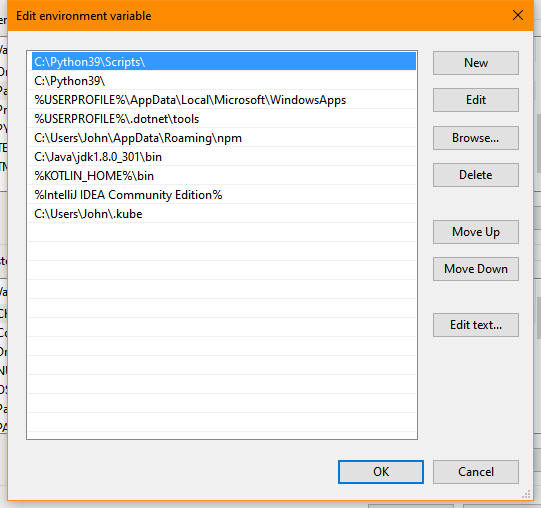

4. Locate the existing Path environment variable in the list of variables for either the personal or system environment variables and click Edit. If there is no existing Path environment variable, click New instead. You see a dialog box similar to the one shown here when working with Windows 10 (other versions of Windows will show a different dialog box, but the purpose is the same, to edit the path).

When you open a new command prompt, you’ll see the new path in play. Changing the environment variable won’t change the path for any existing command prompt windows. Having the right path available when you want to perform the exercises in my books is important. Let me know if you have any questions about them at [email protected].