Microsoft has thrown developers yet another curve—Windows 8 will rely on HTML5 and JavaScript for a programming interface. The revelation has many developers horrified. For me, it means updating my HTML and JavaScript skills, which was one motivation for reading the book reviewed in today’s post. HTML5 Step by Step, written by Faithe Wempen, provides a quick method of getting up to speed on HTML5.

This book is designed to aid anyone who wants to know how to work with HTML5, which means that it starts out extremely simple. The book avoids the ever-popular Hello World example, but the example it does provide is small and easily understood. The chapters don’t remain simple, however, so even if you have some experience with HTML, you can use this book to update your skills. If you are experienced, you’ll likely want to start around Chapter 3 and skim through the material until you come to something unfamiliar, which could happen fairly quickly given the changes in HTML5.

HTML5 Step by Step is light on theory and reference information, but heavy on hands-on exercises. It relies on using Notepad as an editor, which may seem like an odd choice until you read the “Why Learn HTML in Notepad?” section of the Introduction. The author’s reasoning is the same reasoning I would use, which is to make sure that the reader types everything and understands why a particular element is required. If you really want to get the most out of this book, you have to do the exercises in Notepad as the author suggests. Otherwise, I guarantee you’ll miss out on something important. Faithe has made a great choice of teaching aids in this case.

Chapter 1 is most definitely designed for the rank novice. It even shows how to set up the examples directory as a favorite in Notepad. Unlike with many books, however, the rank novice should read this book’s Introduction, because Faithe provides some needed information there, such as the “Understanding HTML Tags” section.

Chapter 2 gets the reader started with some structural elements. Faithe covers everything that the reader is likely to need for a typical starter Web page. I wish that the chapter had covered <meta> tags in a little more detail, or at least provided a table listing them, but this book does have an emphasis on hands-on exercises, so the omission isn’t a glaring one. As an alternative to including the information, an update could provide a URL that lists the tags so the reader knows where to go for additional information.
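To give a sense of what I mean, here is a minimal sketch of a starter page with a few common <meta> tags. The markup is my own illustration rather than an example from the book, and the description, keywords, and title values are placeholders.

```html
<!DOCTYPE html>
<html lang="en">
<head>
  <!-- Declares the character encoding used by the page -->
  <meta charset="utf-8">
  <!-- Short summary that search engines may display (placeholder text) -->
  <meta name="description" content="Placeholder description of the page">
  <!-- Comma-separated keywords (placeholder values) -->
  <meta name="keywords" content="placeholder, keywords">
  <title>Placeholder Title</title>
</head>
<body>
  <p>Page content goes here.</p>
</body>
</html>
```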

By Chapter 3, the reader is formatting text and starting to make the sample site look pretty. I really thought Faithe did a nice job of moving the reader along at a fast but manageable pace. She shows the reader how to make effective use of tag combinations, such as the <kbd> (keyboard) and <b> (bold) tags.
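As a rough illustration of that kind of tag combination (my own sketch, not an excerpt from the book), nesting <b> inside <kbd> marks text as keyboard input and also makes it bold. The command shown is just a placeholder.

```html
<!-- <kbd> marks text the user should type; <b> makes it bold as well -->
<p>To list the files, type <kbd><b>dir</b></kbd> and press Enter.</p>
```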

Some chapters contain a small amount of reference information. For example, Chapter 4 contains a table on page 50 showing the list attributes. These references are very small and quite helpful, but the reader should understand that the emphasis is on doing something and that the reference material may not be complete. For example, the special symbols table on page 56 is missing the em dash, which is something most people use.

The book progresses at a reasonable pace. Never did I find myself rushed. The examples all seem to work fine and I didn’t find missing steps in the procedures. The author uses an adequate number of graphics so that the reader doesn’t get lost. I liked the fact that every exercise ends with a cleanup section and a list of the major points that the reader should have gotten from the exercise.

Readers who are only interested in new tags will need to wait until Chapter 9 to see one. The <figure> tag makes an appearance on page 141. However, even some professionals didn’t use all of the HTML4 tags, so it really does pay to start at Chapter 3 and look for something you don’t recognize. It may surprise you to find that an earlier chapter contains a somewhat new (but not new to HTML5) tag that you’ve passed by.
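For readers who haven’t seen it yet, a minimal sketch of the <figure> tag looks something like the following. This is my own example, not the one from page 141, and the image path, alt text, and caption are placeholders.

```html
<!-- <figure> groups an image with its caption as a single unit -->
<figure>
  <img src="images/placeholder.jpg" alt="Placeholder description of the image">
  <figcaption>Placeholder caption for the image.</figcaption>
</figure>
```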

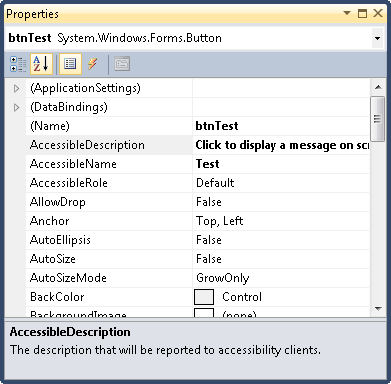

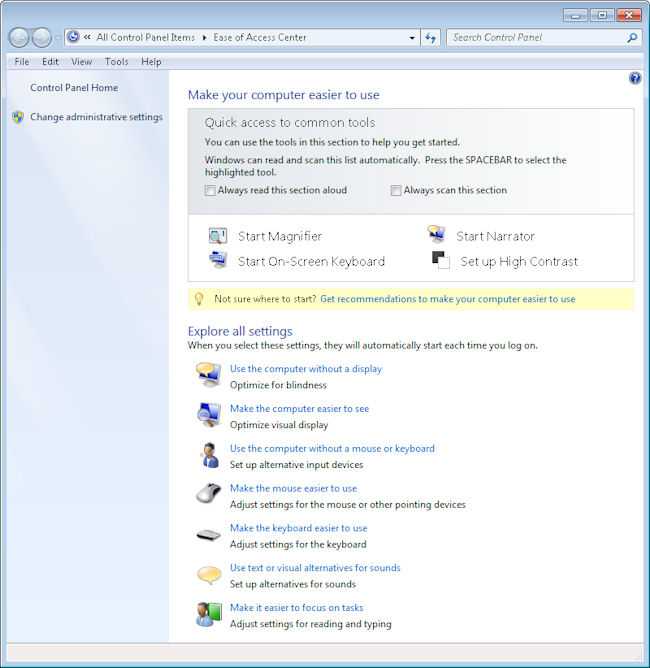

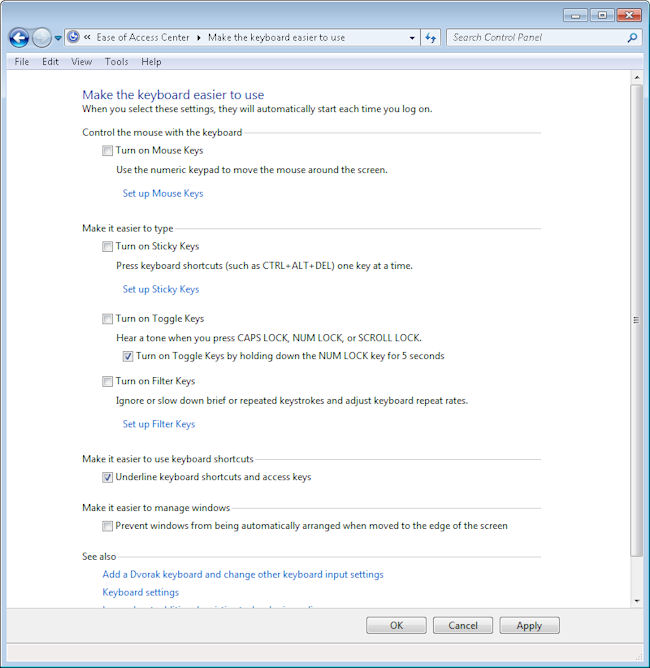

There are a few nits to pick with this book. The first is that the author places the accessibility information in an appendix where almost no one is going to read it. The information should have appeared throughout the rest of the book, wherever it applies. In addition, the author misses the big point that most people today have some sort of special need addressed by accessibility aids. Colorblindness alone affects 8 percent of the male population and 0.5 percent of the female population. This book is unlikely to help you create a truly accessible site, though that isn’t the reason you’re buying the book.

The second is that Appendix C doesn’t really help very much with the additions and subtractions for HTML5. For example, Appendix C doesn’t tell you about the new <aside> tag. If you want a quick list of the new tags, check out the www.w3schools.com HTML5 New Elements page. (I checked the missing <aside> tag against a number of other sites, such as About.com.) The point is that Appendix C won’t give you the complete picture. Again, this isn’t one of the selling points of the book, but the list should have been complete.
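In case you haven’t run across it, the <aside> tag marks content that is only loosely related to the main content around it, such as a sidebar or a pull quote. Here is a minimal sketch of my own (the text is placeholder content, not material from the book):

```html
<article>
  <p>Main text of the article goes here.</p>
  <!-- <aside> holds content tangentially related to the surrounding text -->
  <aside>
    <p>Placeholder sidebar note related to the article.</p>
  </aside>
</article>
```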

The third is that there isn’t really enough information about why something is done or why it changed. The book simply states that it must be done or that it did change. The reader probably doesn’t want a full-blown history of the change, but the why of something can make understanding and, more importantly, remembering a concept easier. Still, this particular nit is minor, since the purpose of the book is to get you started with HTML5 quickly and not to explore it at a theoretical level.

Overall, HTML5 Step by Step is a great book for the novice who wants to learn how to create Web pages. It’s also an acceptable book for someone who’s experienced with HTML coding but wants to get up to date on the HTML5 changes quickly. This book is definitely designed for someone who wants to do something, rather than simply read about it. If you don’t perform the exercises, you won’t get much out of the book.
